Introduction

Logistic regression is a parametric classification method used to estimate a binary class output from real-valued input vectors. In this post, cross entropy is used as the loss function and gradient descent (or mini-batch gradient descent) is used to learn the parameters.

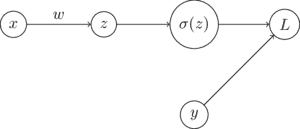

The computation graph shows how logistic regression works. The dot product of each input $x$, a vector of size $d$, and the transposed weight vector $w$ is taken, resulting in $z = w^\top x$. $z$ is put through the sigmoid function to give the prediction $h = \sigma(z)$, and the loss is calculated using the cross entropy between the label $y$ and $h$.
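As a rough illustration of this forward pass, the sketch below computes the dot product, the sigmoid, and the cross entropy loss named in the introduction for a single example. It uses only the Python standard library rather than torch, and the names `sigmoid`, `cross_entropy`, and `forward`, along with the sample values, are hypothetical choices for illustration.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    """Squash a real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def cross_entropy(y: float, h: float) -> float:
    """Cross entropy loss for one binary label y and prediction h."""
    return -(y * math.log(h) + (1.0 - y) * math.log(1.0 - h))


def forward(x: List[float], w: List[float]) -> float:
    """Dot product of input and weights, passed through the sigmoid."""
    z = sum(xi * wi for xi, wi in zip(x, w))
    return sigmoid(z)


# Illustrative values only (assumed, not from the post)
x = [1.0, 2.0]
w = [0.5, -0.25]
h = forward(x, w)             # z = 0.5 - 0.5 = 0, so h = 0.5
loss = cross_entropy(1.0, h)  # -log(0.5) = log(2)
print(h, loss)
```

With a prediction of exactly 0.5 the loss equals log 2, which is the loss of an uninformed guess on a binary label.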

The bias term is ignored for the purposes of this post, but it can easily be appended to the weight vector $w$ after appending a $1$ to each input vector $x$.
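A minimal sketch of that bias trick, assuming plain Python lists in place of tensors: appending a constant 1 to the input and the bias to the weights gives the same linear combination as adding the bias explicitly. The helper `dot` and all values are hypothetical.

```python
from typing import List


def dot(a: List[float], b: List[float]) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))


# Illustrative values only (assumed, not from the post)
x = [2.0, 3.0]
w = [0.5, -1.0]
b = 0.25

# Explicit bias: z = w . x + b
z_explicit = dot(w, x) + b

# Folded bias: append 1 to the input and b to the weights
x_aug = x + [1.0]
w_aug = w + [b]
z_folded = dot(w_aug, x_aug)

print(z_explicit, z_folded)
```

Because the two forms agree, the rest of the model can treat the bias as just another weight.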

Learning

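Given a data set of size $n$ with $d$ dimensions, parameters $w$ must be learned that minimize our loss function $L$. The weight vector is learned using gradient descent, with each step updating $w \leftarrow w - \alpha \frac{\partial L}{\partial w}$. The derivation for the $\frac{\partial L}{\partial w}$ term in the weight update is displayed in the derivations section of this post.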
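The update can be sketched on a toy problem as follows. This is a full-batch version written with the standard library only, assuming the cross entropy gradient $(h - y)\,x$ averaged over the data set; the function names, the toy data, and the step size are hypothetical and are not the classifier code from this post.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_prob(w: List[float], x: List[float]) -> float:
    """Sigmoid of the dot product of weights and input."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)))


def mean_loss(w: List[float], xs: List[List[float]], ys: List[float]) -> float:
    """Mean cross entropy over the data set."""
    total = 0.0
    for x, y in zip(xs, ys):
        h = predict_prob(w, x)
        total -= y * math.log(h) + (1.0 - y) * math.log(1.0 - h)
    return total / len(xs)


def gradient_step(w: List[float], xs: List[List[float]], ys: List[float],
                  alpha: float) -> List[float]:
    """One full-batch update: w <- w - alpha * mean((h - y) * x)."""
    grad = [0.0] * len(w)
    for x, y in zip(xs, ys):
        h = predict_prob(w, x)
        for j, xj in enumerate(x):
            grad[j] += (h - y) * xj / len(xs)
    return [wj - alpha * gj for wj, gj in zip(w, grad)]


# Toy data (assumed): the label is 1 when the first feature is positive;
# the second feature is a constant 1
xs = [[-2.0, 1.0], [-1.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
ys = [0.0, 0.0, 1.0, 1.0]

w = [0.0, 0.0]
loss_before = mean_loss(w, xs, ys)
for _ in range(100):
    w = gradient_step(w, xs, ys, alpha=0.1)
loss_after = mean_loss(w, xs, ys)
print(loss_before, loss_after)
```

Starting from zero weights, every prediction is 0.5, and repeated updates push the first weight positive so that the loss falls.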

Code

Code for a logistic regression classifier is shown in the block below:

from tqdm import trange
import torch


def ErrorRate(y: torch.Tensor, yhat: torch.Tensor) -> float:
    """ Calculate error rate (1 - accuracy)

    Args:
        y: true labels, same shape as yhat
        yhat: predicted labels

    Returns:
        error rate
    """
    # Fraction of mismatched labels, converted to a Python float
    return (torch.sum((y != yhat).float()) / y.shape[0]).item()

class LogisticRegressionClassifier:

    def __init__(self) -> None:
        """ Instantiate logistic regression classifier
        """
        self.w = None
        self.calcError = ErrorRate

    def fit(self, x, y, alpha=1e-4, epochs=1000, batch=32) -> None:
        """ Fit logistic regression classifier to dataset

        Args:
            x: input data, shape (n, d)
            y: input labels, shape (n, 1)
            alpha: learning rate for weight update
            epochs: number of epochs to train
            batch: size of batches for training
        """
        self.w = torch.rand((1, x.shape[1]))
        epochs = trange(epochs, desc='Error')
        for epoch in epochs:
            # Step through the data one batch at a time. The last batch may
            # be smaller than the rest but is never empty, which avoids a
            # division by zero in calcGradient.
            for start in range(0, x.shape[0], batch):
                end = start + batch
                hx = self.probs(x[start:end])
                dw = self.calcGradient(x[start:end], y[start:end], hx)
                self.w = self.w - (alpha * dw)
            # Report the error rate on the full data set after each epoch
            hx = self.predict(x)
            error = self.calcError(y, hx)
            epochs.set_description('Err: %.4f' % error)

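    def probs(self, x: torch.Tensor) -> torch.Tensor:
        """ Determine probability of label being 1

        Args:
            x: input data

        Returns:
            probability for each member of input
        """
        # Dot product of each row of x with the weights, passed through the
        # sigmoid; [:, None] turns the result into a column of shape (n, 1)
        hx = 1 / (1 + torch.exp(-torch.einsum('ij,kj->i', x, self.w)))[:, None]
        return hx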

    def predict(self, x: torch.Tensor) -> torch.Tensor:
        """ Predict labels

        Args:
            x: input data

        Returns:
            labels for each member of input
        """
        hx = self.probs(x)
        hx = (hx >= 0.5).float()
        return hx

    def calcGradient(self, x: torch.Tensor, y: torch.Tensor, hx: torch.Tensor) -> torch.Tensor:
        """ Calculate weight gradient

        Args:
            x: input data, shape (n, d)
            y: input labels, shape (n, 1)
            hx: predicted probabilities, shape (n, 1)

        Returns:
            tensor of gradient values with one entry per weight
        """
        # Mean over the batch of (hx - y) * x, the cross entropy gradient
        return torch.sum(x * (hx - y), dim=0) / x.shape[0]

Derivations

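Derivative of loss function $L = -\left[y \log h + (1 - y) \log (1 - h)\right]$ with respect to sigmoid output $h$:

$$\frac{\partial L}{\partial h} = -\frac{y}{h} + \frac{1 - y}{1 - h} = \frac{h - y}{h(1 - h)}$$

Derivative of sigmoid function $h = \sigma(z) = \frac{1}{1 + e^{-z}}$ with respect to sigmoid input $z$:

$$\frac{\partial h}{\partial z} = \sigma(z)\,(1 - \sigma(z)) = h(1 - h)$$

Derivative of linear combination $z = w^\top x$ with respect to weight $w_j$:

$$\frac{\partial z}{\partial w_j} = x_j$$

Derivative of loss function with respect to weight $w_j$:

$$\frac{\partial L}{\partial w_j} = \frac{\partial L}{\partial h} \cdot \frac{\partial h}{\partial z} \cdot \frac{\partial z}{\partial w_j} = \frac{h - y}{h(1 - h)} \cdot h(1 - h) \cdot x_j = (h - y)\, x_j$$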
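One way to sanity-check the chain-rule result for the cross entropy loss, namely that the gradient with respect to each weight is $(h - y)\,x_j$, is to compare it against a finite-difference estimate. The sketch below does this for a single example using the standard library only; the function names and sample values are hypothetical.

```python
import math
from typing import List


def loss(w: List[float], x: List[float], y: float) -> float:
    """Cross entropy loss of a single example under weights w."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    h = 1.0 / (1.0 + math.exp(-z))
    return -(y * math.log(h) + (1.0 - y) * math.log(1.0 - h))


def analytic_grad(w: List[float], x: List[float], y: float) -> List[float]:
    """Gradient from the chain rule: (h - y) * x_j for each weight."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    h = 1.0 / (1.0 + math.exp(-z))
    return [(h - y) * xj for xj in x]


def numeric_grad(w: List[float], x: List[float], y: float,
                 eps: float = 1e-6) -> List[float]:
    """Central finite-difference estimate of the same gradient."""
    grad = []
    for j in range(len(w)):
        w_plus = w[:j] + [w[j] + eps] + w[j + 1:]
        w_minus = w[:j] + [w[j] - eps] + w[j + 1:]
        grad.append((loss(w_plus, x, y) - loss(w_minus, x, y)) / (2 * eps))
    return grad


# Illustrative values only (assumed, not from the post)
w = [0.3, -0.7, 0.2]
x = [1.5, 0.5, -2.0]
y = 1.0

max_diff = max(abs(a - n) for a, n in
               zip(analytic_grad(w, x, y), numeric_grad(w, x, y)))
print(max_diff)
```

A very small `max_diff` suggests the analytic expression and the loss definition agree; a large one would point to a sign or indexing mistake in the derivation.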

Resources

- Jurafsky, Daniel, and James H. Martin. Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition. Pearson, 2020.

- Russell, Stuart J., et al. Artificial Intelligence: A Modern Approach. 3rd ed., Prentice Hall, 2010.